Active Research Projects

-

Verbal Apprentice Learner (VAL)

The Verbal Apprentice Learner (VAL) is a framework for acquiring hierarchical task knowledge from natural dialog. VAL’s semantic parser uses a pre-trained GPT language model to perform individual, highly granular tasks, such as predicate-argument extraction, semantic matching, and argument grounding. The result is a system that can convert natural dialog into formal instructions that are grounded in the operations and objects available in a specific environment. VAL then uses classical learning techniques, like hierarchical task decomposition and argument variablization, to convert these instructions into interpretable, reusable, symbolic knowledge structures.

-

Human-AI Teaming (STRONG)

Humans team well, while AI agents often do not. One example is OpenAI Five, the Dota 2 AI: its five agents played at the level of five of the world's top Dota 2 players, but when they were split across teams alongside human players to compete against each other, the agents did not collaborate well. The overall goal of the project, STRONG (Strengthening Teamwork for Robust Operations in Novel Groups), is to probe the future of human-machine teaming (HMT) and to develop natural, task-general, human-guided machine learning capabilities for future teaming scenarios.

-

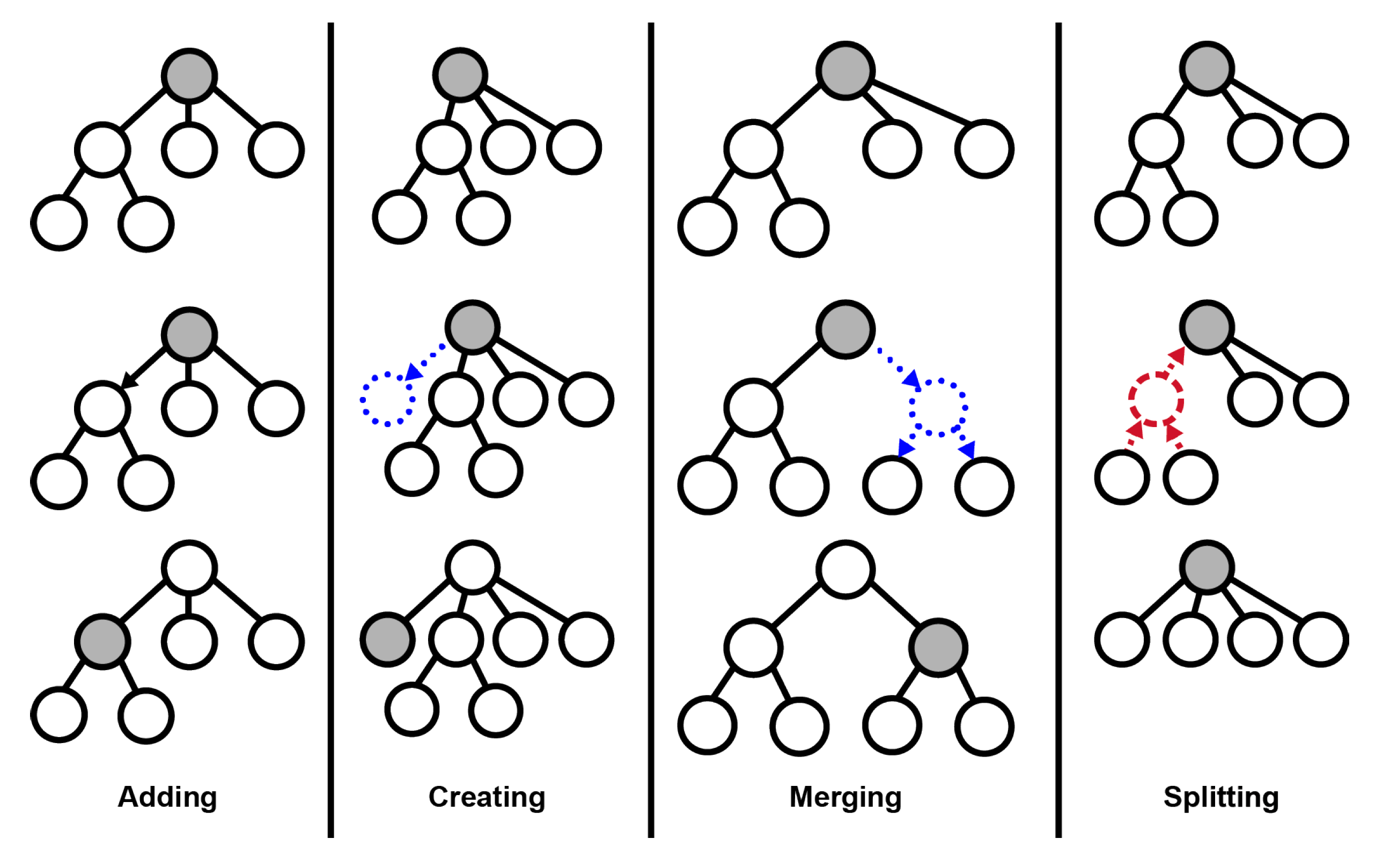

Apprentice Tutor Builder

This project sits at the intersection of HCI and human-like AI models. The goal is to design teachable agents using Hierarchical Task Networks (HTNs) that can learn from humans in a multi-modal and incremental way. These apprentice agents are provided with primitive operators that define fundamental knowledge of the actions the agent can take. Each action carries conditions that define when it can be applied given a particular configuration of the task environment. Learning is achieved by constructing methods that solve tasks, decomposing higher-level tasks into subtasks, and refining the conditions on methods to determine when they can be applied. These agents will serve as the underlying AI and be integrated into other ongoing projects, including our Apprentice Tutors and HMT gaming work.
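
The relationship between primitive operators, methods, and task decomposition can be illustrated with a small sketch. This is not the project's implementation: the `Operator` and `Method` classes, the `plan` function, and the toy `increment` / `add_two` domain are all hypothetical names chosen for illustration, and the sketch shows only how conditions gate decomposition, not how methods are learned.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, int]  # hypothetical, deliberately tiny state representation


@dataclass
class Operator:
    """A primitive action with an applicability condition and an effect."""
    condition: Callable[[State], bool]
    effect: Callable[[State], State]


@dataclass
class Method:
    """One way to decompose a higher-level task into subtasks."""
    condition: Callable[[State], bool]
    subtasks: List[str]


def plan(task: str, state: State,
         operators: Dict[str, Operator],
         methods: Dict[str, List[Method]]) -> Optional[Tuple[List[str], State]]:
    """Depth-first decomposition: return (operator names, final state) or None."""
    op = operators.get(task)
    if op is not None:
        # Primitive task: apply it only if its condition holds.
        return ([task], op.effect(state)) if op.condition(state) else None
    for method in methods.get(task, []):
        if not method.condition(state):
            continue
        steps: List[str] = []
        current = state
        for sub in method.subtasks:
            result = plan(sub, current, operators, methods)
            if result is None:
                break  # this method fails; try the next one
            sub_steps, current = result
            steps.extend(sub_steps)
        else:
            return steps, current
    return None


# Toy domain (an assumption for illustration): a counter capped at 3.
OPERATORS = {
    "increment": Operator(condition=lambda s: s["x"] < 3,
                          effect=lambda s: {**s, "x": s["x"] + 1}),
}
METHODS = {
    "add_two": [Method(condition=lambda s: True,
                       subtasks=["increment", "increment"])],
}

print(plan("add_two", {"x": 0}, OPERATORS, METHODS))
print(plan("add_two", {"x": 2}, OPERATORS, METHODS))
```

In this toy domain the second call fails because the operator's condition blocks the second `increment`. Refining method conditions, as described above, would amount to learning that `add_two` should not be attempted from that state.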

-

Apprentice Tutors

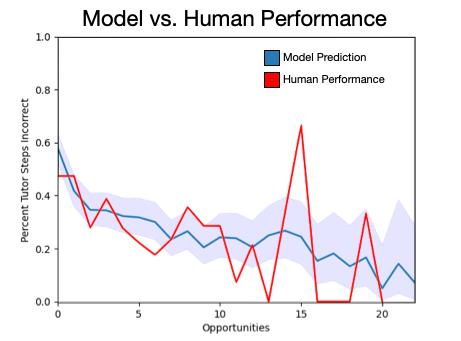

Apprentice Tutors is a platform for authoring and deploying tutors that provide personalized education on AI concepts at scale. We engineered the Apprentice Tutors to select problems adaptively using Bayesian Knowledge Tracing (BKT), engaging students with question types that target the knowledge components needing the most improvement. We aim to provide this tutor as a supplement to in-class instruction. Our work showcases the promise of expanding equitable and personalized AI education throughout higher education, enabling students to learn in scenarios where a human instructor with AI expertise might not be readily available.
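
As a rough illustration of how BKT can drive adaptive problem selection, the sketch below applies the standard BKT update after each student response and then picks the knowledge component with the lowest estimated mastery. It is a minimal sketch, not the platform's code: `BKTParams`, `bkt_update`, `pick_weakest`, the parameter values, and the knowledge component names are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class BKTParams:
    p_init: float   # prior probability the skill is already known
    p_learn: float  # probability of learning the skill at each opportunity
    p_slip: float   # probability of answering wrong despite knowing the skill
    p_guess: float  # probability of answering right without knowing the skill


def bkt_update(p_known: float, correct: bool, params: BKTParams) -> float:
    """Standard BKT step: Bayesian posterior given the response, then learning."""
    if correct:
        evidence_known = p_known * (1 - params.p_slip)
        evidence_total = evidence_known + (1 - p_known) * params.p_guess
    else:
        evidence_known = p_known * params.p_slip
        evidence_total = evidence_known + (1 - p_known) * (1 - params.p_guess)
    posterior = evidence_known / evidence_total
    return posterior + (1 - posterior) * params.p_learn


def pick_weakest(mastery: Dict[str, float]) -> str:
    """Choose the knowledge component with the lowest mastery estimate."""
    return min(mastery, key=mastery.get)


# Hypothetical parameters and knowledge components, for illustration only.
params = BKTParams(p_init=0.5, p_learn=0.1, p_slip=0.1, p_guess=0.2)
mastery = {"search": params.p_init, "regression": params.p_init}
mastery["search"] = bkt_update(mastery["search"], True, params)
mastery["regression"] = bkt_update(mastery["regression"], False, params)
print(mastery, "-> next practice target:", pick_weakest(mastery))
```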

-

Cobweb

Cobweb is a clustering model that supports incremental concept learning from few examples. The model learns both structure and parameters, and its structure expands dynamically. It takes a multimodal approach, which is human-like. Future work includes optimizing the existing model, integrating concept learning with skill learning, and organizing skills with Cobweb.

-

(A)I Can Play Gomoku

Artificial intelligence systems such as AlphaZero have demonstrated their ability to play strategic games at an expert level. However, little research has explored the applications of these algorithms for educational purposes. To explore this gap, we developed a tutor for Gomoku, a strategic game similar to tic-tac-toe. Our tutor can provide users with immediate feedback and hints, and supports users in engaging in after-action reviews. These capabilities are powered by an expert model that we discovered using AlphaZero. We employed this tutor in an experimental study to investigate two research questions: 1) Can Game AI models, which are inhuman in their expertise, provide guidance that improves human learning? 2) How do different types of Game AI derived feedback affect people’s learning? We analyze how participants use this tutor to learn Gomoku and how tutor feedback and its timing affect learning. Our work demonstrates new pedagogical uses for Game AI models.

Past Research Projects

-

POCUS AI

The POCUS AI program will produce code demonstrating the feasibility of automated interpretation of point-of-care ultrasound (POCUS) across multiple applications and will provide a foundation for future work. POCUS can quickly and accurately address a broad range of medical needs directly on the battlefield when in the hands of highly-trained medical personnel. However, a lack of POCUS competency among military medics has led to underutilization. Automated interpretation by artificial intelligence (AI) could significantly reduce training burdens and increase POCUS usage. The POCUS AI program will address this need in a cost-effective manner by advancing AI techniques that use minimal training data and can serve multiple distinct POCUS applications. The algorithms will be demonstrated on four DoD-priority battlefield medical needs: detection of pneumothorax, measurement of optic nerve sheath diameter, nerve block guidance, and verification of endotracheal intubation.

-

Predicting Effects of HPO Interventions with Socio-Cognitive Agents that Leverage Individual Residuals (TAILOR)

Human performance optimization (HPO) is centered around designing tools and interventions that increase human performance. Typically, A/B experiments are used to evaluate alternative HPO interventions to determine which yield the best performance. Unfortunately, A/B experiments are time consuming and costly to run. Further, each new intervention requires an additional A/B experiment to evaluate it, which means that it is often feasible to evaluate only a few interventions. To overcome these issues, we are exploring the use of computational models of humans (computer models that receive the same stimuli as human A/B experiment participants and then make the same decisions they do) to simulate A/B experiments and support reasoning about hypothetical and counterfactual HPO interventions. We are also exploring how data about specific individuals can be leveraged to create tuned computational models that better approximate and predict the behavior of the individuals they were tuned to.

-

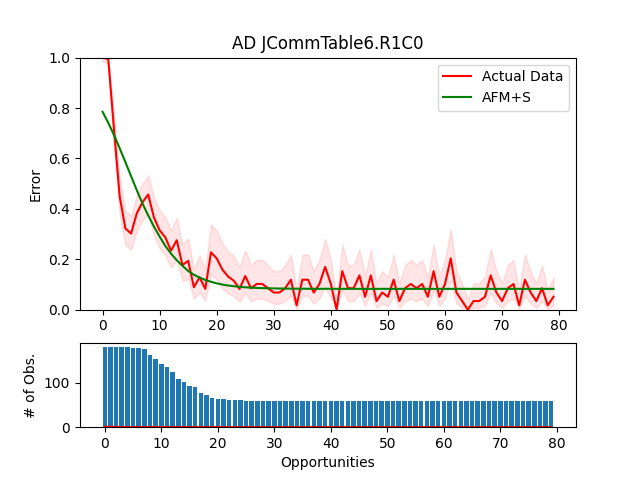

Investigating Knowledge Tracing using Simulated Students

Knowledge tracing algorithms are embedded in Intelligent Tutoring Systems (ITS) to keep track of what students know and do not know, in order to better focus practice. Because in-classroom experiments are costly, we explore the idea of using machine learning models that can simulate students' learning process. We conduct experiments using such agents, generated by the Apprentice Learner (AL) Architecture, to investigate the online use of different knowledge tracing models (Bayesian Knowledge Tracing and the Streak model). We were able to successfully A/B test these different approaches using simulated students. An analysis of our experimental results revealed an error in the implementation of one of our knowledge tracing models that was not identified in our previous work, suggesting AL agents provide a practical means of evaluating knowledge tracing models prior to more costly classroom deployments. Additionally, our analysis found a positive correlation between the model parameters obtained from human data and the parameters obtained from simulated learners. This finding suggests that it might be possible to initialize the parameters for knowledge tracing models using simulated data when no human student data is yet available.
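
For readers unfamiliar with the Streak model, the sketch below shows the general idea: a skill is treated as mastered once the student answers a set number of consecutive problems correctly. The function name `streak_mastered` and the threshold of 3 are assumptions for illustration, not details taken from the study.

```python
from typing import Iterable


def streak_mastered(responses: Iterable[bool], threshold: int = 3) -> bool:
    """Return True once `threshold` consecutive correct responses are seen."""
    streak = 0
    for correct in responses:
        # A wrong answer resets the run of consecutive correct answers.
        streak = streak + 1 if correct else 0
        if streak >= threshold:
            return True
    return False


# Hypothetical response sequences from a (simulated) student.
print(streak_mastered([True, True, False, True, True, True]))
print(streak_mastered([True, True, False, True]))
```

Unlike BKT, this criterion has no probabilistic parameters to fit, which makes it a simple baseline to compare against in an A/B test.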

-

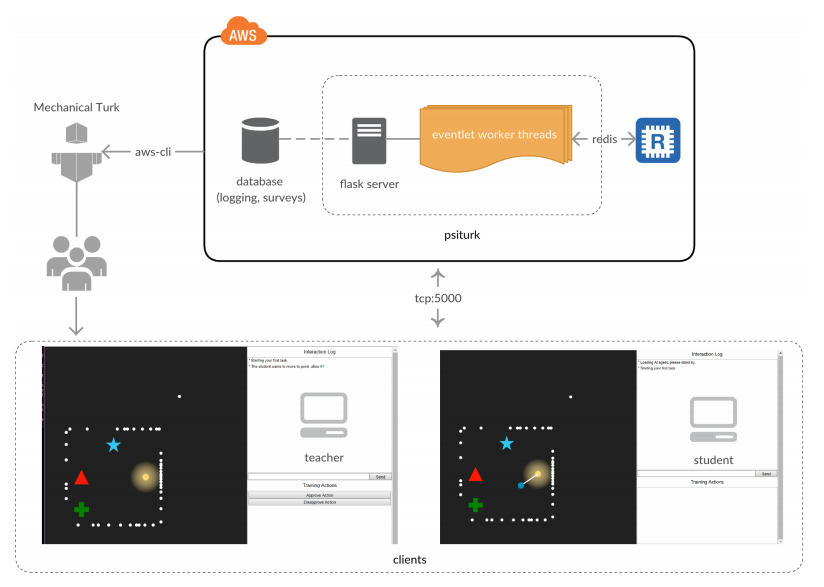

Natural Training Interactions Testbed

Teachable AI (TAI) systems can significantly reduce the burden of creating AI systems and empower non-programmers to author AI models. Our goal is to build AI agents that can be taught through natural human interactions rather than programmed. The Natural Training Interaction (NTI) testbed is a system that lets us observe teaching and learning interactions between multiple participants, including humans and AI agents. Studying these interactions will enable us to understand which patterns and modalities are effective when transferring knowledge between participants. We aim to eventually build TAI systems that use these natural interaction patterns to create human-centered AI technologies.